Upgrading code-server from 3.4.1 to 3.5.0 was always supposed to be easy. Turns out it was easy.

Just for the sake of sharing the ease, I’ve written up the embarrassingly simple process.

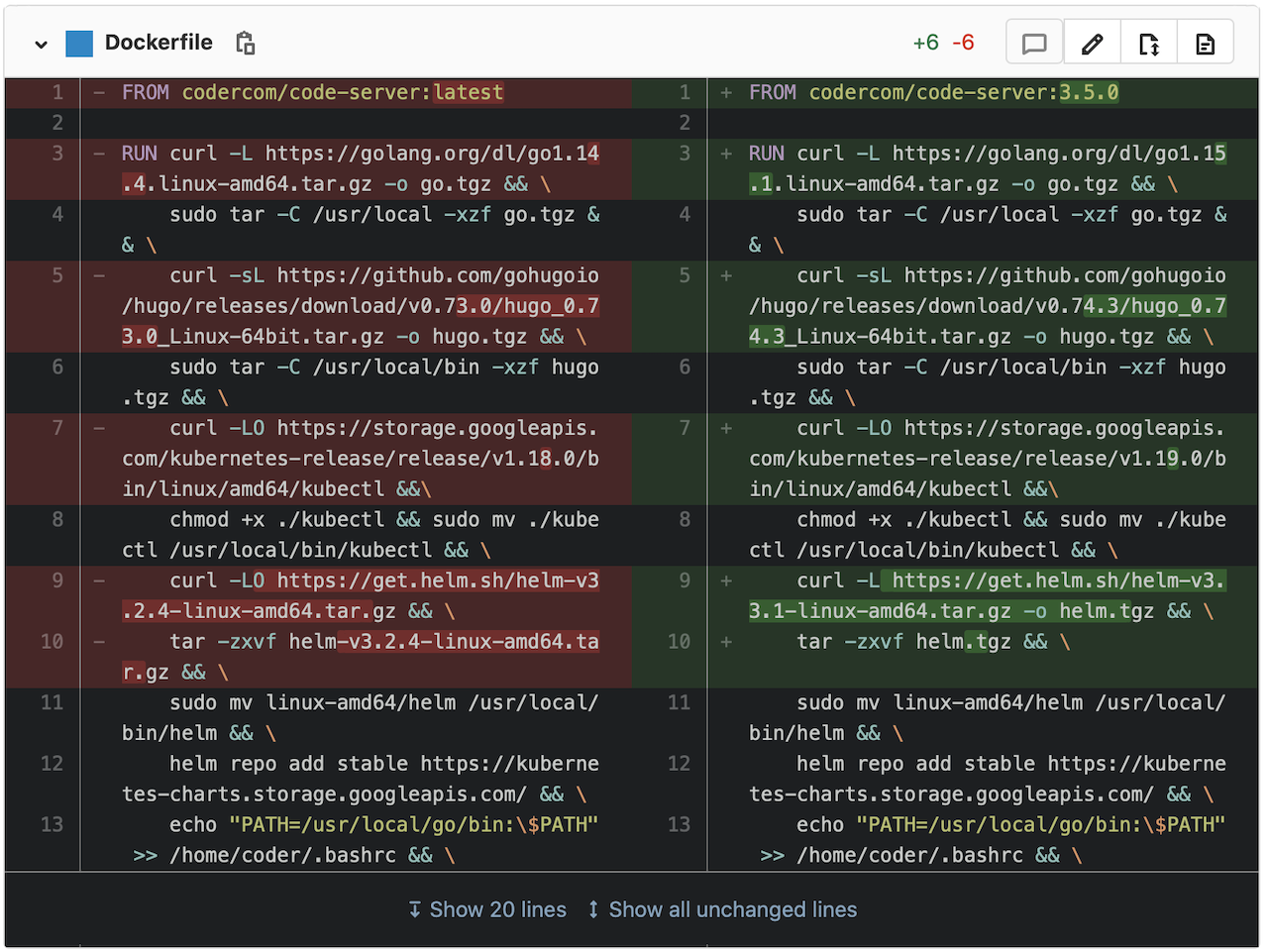

First, update my build tools image that wraps the code-server image.

Since the pipeline tags each image with the commit's short SHA, I tested the spec against that tag before merging into the master branch.
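For anyone who hasn't set up short-SHA tagging, here is a minimal sketch of a GitLab CI job that produces tags like `ead46c16`. It is hypothetical, not my actual pipeline: it assumes GitLab CI with Docker-in-Docker, a Dockerfile at the repo root, and the job name and Docker versions are illustrative. `CI_COMMIT_SHORT_SHA`, `CI_REGISTRY_IMAGE`, and the `CI_REGISTRY*` login variables are GitLab's predefined variables.

```yaml
# Hypothetical .gitlab-ci.yml job (assumed names/versions): build the image
# and tag it with the 8-character short SHA of the commit.
build-image:
  stage: build
  image: docker:19.03.12
  services:
    - docker:19.03.12-dind
  variables:
    DOCKER_TLS_CERTDIR: "/certs"
  script:
    # Log in to the project's GitLab container registry
    - echo "$CI_REGISTRY_PASSWORD" | docker login -u "$CI_REGISTRY_USER" --password-stdin "$CI_REGISTRY"
    # For this project, CI_REGISTRY_IMAGE would be registry.gitlab.com/mterhar/code-server-buildtools
    - docker build -t "$CI_REGISTRY_IMAGE:$CI_COMMIT_SHORT_SHA" .
    - docker push "$CI_REGISTRY_IMAGE:$CI_COMMIT_SHORT_SHA"
```

Because every commit gets its own immutable tag, the deployment spec can point at exactly the build being tested before anything is merged.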

Updated my code-server.yml file with the new image tag and applied it.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: code-server
  name: code-server
  namespace: development
spec:
  selector:
    matchLabels:
      app: code-server
  replicas: 1
  template:
    metadata:
      labels:
        app: code-server
    spec:
      imagePullSecrets:
        - name: gitlabmjt
      containers:
        - image: registry.gitlab.com/mterhar/code-server-buildtools:ead46c16
          imagePullPolicy: Always
          name: code-server
          # [ . . . ]
```

```console
$ kubectl apply -f code-server.yml
[ . . . ]
ingress.networking.k8s.io/code-server unchanged
ingress.networking.k8s.io/code-server-hugo unchanged
service/code-server-hugo unchanged
service/code-server unchanged
persistentvolumeclaim/code-server-pvc unchanged
deployment.apps/code-server configured
```

First reload of Code-Server web application

- It forgot my dark theme, so I had to update that setting manually

- It noticed the Go version had been updated and updated the plugin to match, all automatically

- I manually checked the versions of the other installed tools:

```console
coder@code-server-7fd78db574-cxzzq:~/www-brownfield-dev$ go version
go version go1.15.1 linux/amd64
coder@code-server-7fd78db574-cxzzq:~/www-brownfield-dev$ kubectl version
Client Version: version.Info{Major:"1", Minor:"19", GitVersion:"v1.19.0", GitCommit:"e19964183377d0ec2052d1f1fa930c4d7575bd50", GitTreeState:"clean", BuildDate:"2020-08-26T14:30:33Z", GoVersion:"go1.15", Compiler:"gc", Platform:"linux/amd64"}
Server Version: version.Info{Major:"1", Minor:"18", GitVersion:"v1.18.5", GitCommit:"e6503f8d8f769ace2f338794c914a96fc335df0f", GitTreeState:"clean", BuildDate:"2020-06-26T03:39:24Z", GoVersion:"go1.13.9", Compiler:"gc", Platform:"linux/amd64"}
coder@code-server-7fd78db574-cxzzq:~/www-brownfield-dev$ helm version
version.BuildInfo{Version:"v3.3.1", GitCommit:"249e5215cde0c3fa72e27eb7a30e8d55c9696144", GitTreeState:"clean", GoVersion:"go1.14.7"}
coder@code-server-7fd78db574-cxzzq:~/www-brownfield-dev$ hugo version
Hugo Static Site Generator v0.74.3-DA0437B4 linux/amd64 BuildDate: 2020-07-23T16:22:34Z
```

Write a blog post… this one

So now I’m cranking away at a nice new blog post using this image. Super smooth.

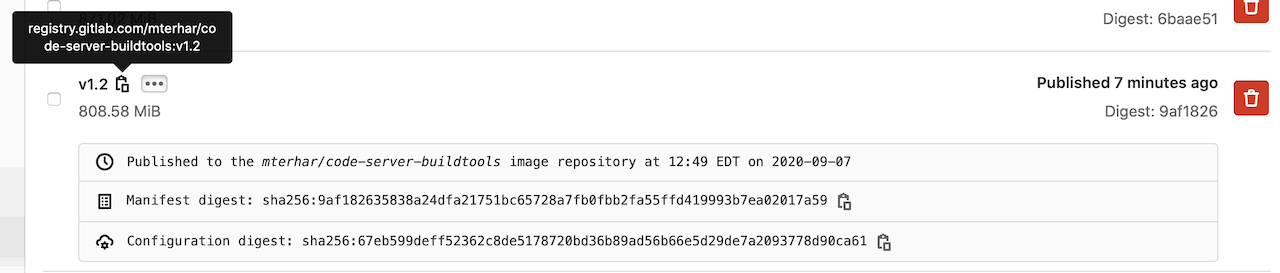

Tag the code-server-buildtools commit

Now I need to tag the code-server-buildtools commit so the image is published as v1.2 and everyone can enjoy it.
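One way to turn a Git tag like v1.2 into an image tag is a CI job that only runs for tag pipelines and re-tags the image already built for that commit. This is a hypothetical sketch, not my actual pipeline: it assumes GitLab CI with Docker-in-Docker, and that an image tagged with the commit's short SHA was already pushed by an earlier pipeline. The job name and Docker versions are illustrative; `CI_COMMIT_TAG` is GitLab's predefined variable, set only when the pipeline runs for a tag.

```yaml
# Hypothetical .gitlab-ci.yml job (assumed names/versions): on a tag push,
# give the existing short-SHA image a versioned tag such as v1.2.
release-image:
  stage: deploy
  image: docker:19.03.12
  services:
    - docker:19.03.12-dind
  variables:
    DOCKER_TLS_CERTDIR: "/certs"
  rules:
    # Run only in tag pipelines; CI_COMMIT_TAG is unset otherwise
    - if: $CI_COMMIT_TAG
  script:
    - echo "$CI_REGISTRY_PASSWORD" | docker login -u "$CI_REGISTRY_USER" --password-stdin "$CI_REGISTRY"
    # Assumes this short-SHA tag was pushed by an earlier build
    - docker pull "$CI_REGISTRY_IMAGE:$CI_COMMIT_SHORT_SHA"
    - docker tag "$CI_REGISTRY_IMAGE:$CI_COMMIT_SHORT_SHA" "$CI_REGISTRY_IMAGE:$CI_COMMIT_TAG"
    - docker push "$CI_REGISTRY_IMAGE:$CI_COMMIT_TAG"
```

Re-tagging instead of rebuilding means v1.2 is byte-for-byte the image that was already tested under its short-SHA tag.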

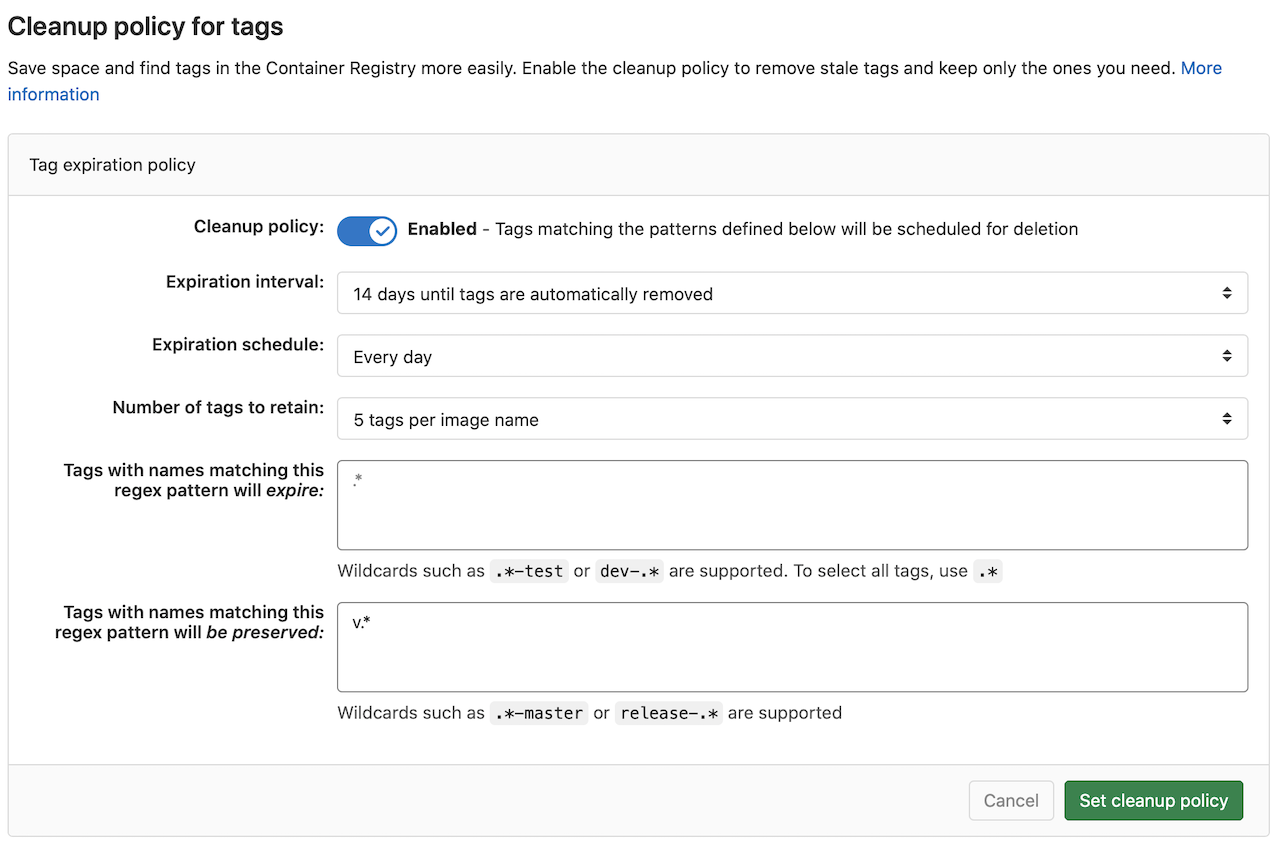

And while I’m in here, I might as well configure the project to clean up the old Docker image tags.

Check for quality of life fixes

Allegedly the clipboard issue was fixed and closed, but nothing has changed on my iPad with the keyboard. I still need to do the “cmd+f” trick to get text into the find field if I want to copy it out and paste it elsewhere.

Everything else seems about the same as it ever was. I’ll update this post if that changes.

Why not Helm?

I am not sure I understand the value of Helm as it currently exists. Text management is pretty easy using a text editor and git. Having a slightly longer YAML spec and using the Kubernetes-native ways of referencing things is pretty easy and leads to obvious outcomes.

Helm hides some of this complexity by making a huge mess of all the specs and replacing things with other things. If this were a 20-pod architecture, maybe it would make sense. As it stands, I don’t see the value of adding that, so I won’t.
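As an example of that Kubernetes-native referencing, a Service finds the code-server pods purely through the `app: code-server` label the Deployment already puts on its pod template. The manifest below is a hypothetical sketch, since my actual Service spec isn't shown in this post; the port numbers are assumptions (8080 is code-server's default listen port).

```yaml
# Hypothetical Service (assumed ports): it is linked to the Deployment only
# by the app: code-server label, with no templating involved.
apiVersion: v1
kind: Service
metadata:
  name: code-server
  namespace: development
spec:
  selector:
    app: code-server
  ports:
    - name: http
      port: 80
      # Assumed container port; adjust if the image listens elsewhere
      targetPort: 8080
```

If the label is changed in one place but not the other, the link breaks visibly, and `kubectl get endpoints code-server -n development` will show no pod addresses behind the Service.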

What I may do is use KUDO to make an operator that keeps this pod healthy and manages the upgrades for you. Operators aren’t just a YAML hider; they add reactive behavior and monitoring. That could be helpful, though honestly this deployment spec has been rock solid for me so far. I even upgraded the Kubernetes cluster underneath it from 1.17.9 to 1.18.5; it blipped when the pod was moved from one node to another, but it was back up before I even checked its status.

Feedback welcome!

Always!